Kubernetes – A Mini Data Center

Kubernetes is increasingly taking over the traditional concept and function of data centers, a concept established in an era when monolithic software depended on the operating system it ran on. Data centers used to be the places where all of an organization's information, files, and records were kept and controlled. That is no longer the case. More and more companies are moving to the cloud, and developers are using containerization and microservices to build modern cloud-native applications. As a result, infrastructure strategies are taking a more hybrid approach, integrating on-premises, colocation, cloud, DevOps, and edge delivery options.

It is fair to say that, together, Kubernetes, DevOps, and microservices are making IT more agile and better able to deliver what the business needs.

In this blog, we will explain what Kubernetes is, take a close look at how it can serve as a mini data center, and finally show how it can provide the versatile application support that cuts hardware costs and leads to more efficient architecture in a business.

What is Kubernetes?

When Docker and other containerization platforms first appeared, containers were managed through scripts or home-grown tools. As the number and complexity of containers grew, these techniques proved insufficient, and container orchestration technologies became necessary. Kubernetes, or K8s, is one such solution: an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation.
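Much of this automation rests on a declarative model: you describe the desired state (for example, how many copies of an application should run), and Kubernetes controllers repeatedly compare it with the actual state and act to close the gap. The Python sketch below illustrates that reconciliation idea in miniature. The `reconcile` function, the `_ids` counter, and the list of replica names are hypothetical stand-ins for illustration, not the real Kubernetes API; real controllers read and write state through the API server.

```python
from itertools import count

# Hypothetical source of unique replica names for this toy model.
_ids = count(1)


def reconcile(desired_replicas: int, running: list[str]) -> list[str]:
    """One pass of a toy control loop: make the actual state match the desired state."""
    actual = list(running)  # observe the actual state (copy, so the input is untouched)
    while len(actual) < desired_replicas:
        actual.append(f"replica-{next(_ids)}")  # too few: start a replacement
    while len(actual) > desired_replicas:
        actual.pop()  # too many: stop a surplus replica
    return actual


if __name__ == "__main__":
    replicas = reconcile(3, [])      # start from nothing
    print(replicas)                  # ['replica-1', 'replica-2', 'replica-3']
    replicas = replicas[1:]          # simulate one replica crashing
    replicas = reconcile(3, replicas)
    print(replicas)                  # ['replica-2', 'replica-3', 'replica-4']
```

Because the loop only ever compares "want" against "have", the same code handles first-time deployment, crash recovery, and scaling down.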

Kubernetes enables users to manage containers as a single system rather than individually. Kubernetes clusters can run almost anywhere: on-premises, in the public cloud, or in a hybrid cloud environment, which makes it easier to move applications across environments.

Benefits of Kubernetes

Kubernetes's architecture is designed to provide high availability: the goal is to avoid downtime so that the applications running inside containers remain accessible. If a container goes down, Kubernetes replaces it with a new, identical copy (which receives a different IP address), usually quickly enough that users do not notice. Kubernetes also scales applications to match demand, which helps keep response times short and applications loading quickly. Another major feature of K8s is its support for disaster recovery: the cluster's state is stored in etcd, so if the infrastructure suffers a problem such as data loss, that state can be restored from a backup to its latest saved version.
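One concrete mechanism behind this scaling is the Horizontal Pod Autoscaler (HPA). The Kubernetes documentation gives its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a minimal sketch of that rule only; its name and the default bounds `min_replicas=1` and `max_replicas=10` are illustrative assumptions, and the real controller also deals with stabilization windows, missing metrics, and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,    # assumed bound for this sketch
                     max_replicas: int = 10,   # assumed bound for this sketch
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA's core scaling rule (not the full controller)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # 6: CPU at 90% against a 60% target
    print(desired_replicas(4, 63, 60))  # 4: within tolerance, no change
    print(desired_replicas(4, 20, 60))  # 2: load dropped, scale down
```

For example, four pods averaging 90% CPU against a 60% target give a ratio of 1.5, so the sketch asks for six pods.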

Architecture of Kubernetes

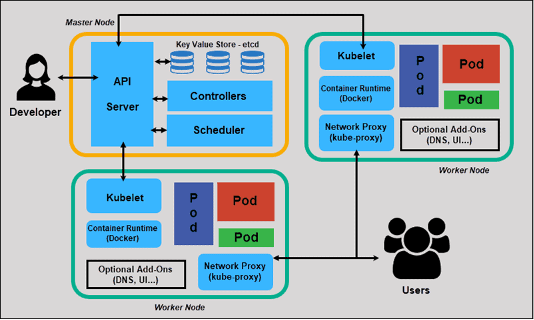

The Kubernetes architecture is made up of clusters, nodes (master and worker), pods, and a virtual network. The cluster is the top-level building block: a set of nodes, each of which is a single compute host (a physical or virtual machine). A cluster typically contains several worker nodes, which run the containerized applications, and a master node (also called the control plane), which supervises and manages the worker nodes.

As Figure 1 shows, the master node runs the key Kubernetes processes that keep the cluster working properly. The API server is the front door to the cluster: all clients and internal system components interact with the cluster through it. The controller manager acts as the manager: it keeps an overview of what is happening in the cluster and determines whether something needs to be repaired, removed, or restarted. The scheduler assigns containers (more precisely, pods) to worker nodes based on the workload and the resources available on each node. Finally, etcd is a key-value store that holds the current state of the whole cluster, including its nodes and containers; it contains both configuration data and status data. Disaster recovery is done by backing up and restoring this database.
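The real kube-scheduler works in two phases, filtering out nodes that cannot run a pod and then scoring the remaining ones, and each phase is made of many plugins. The sketch below models only the basic idea described above, using CPU and memory requests. The `Node` fields, the node names, and the "least allocated" scoring formula (prefer the node with the most room left afterwards) are simplifying assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int    # millicores the node can offer to pods
    cpu_requested: int   # millicores already requested by pods on the node
    mem_capacity: int    # MiB the node can offer to pods
    mem_requested: int   # MiB already requested by pods on the node

    def fits(self, cpu: int, mem: int) -> bool:
        """Filter step: does the pod's request fit in what is left?"""
        return (self.cpu_requested + cpu <= self.cpu_capacity
                and self.mem_requested + mem <= self.mem_capacity)

    def score(self, cpu: int, mem: int) -> float:
        """Score step ("least allocated"): average fraction left free after placing the pod."""
        cpu_free = (self.cpu_capacity - self.cpu_requested - cpu) / self.cpu_capacity
        mem_free = (self.mem_capacity - self.mem_requested - mem) / self.mem_capacity
        return (cpu_free + mem_free) / 2


def schedule(cpu: int, mem: int, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits (the pod stays Pending)."""
    feasible = [n for n in nodes if n.fits(cpu, mem)]
    if not feasible:
        return None
    return max(feasible, key=lambda n: n.score(cpu, mem)).name


if __name__ == "__main__":
    cluster = [
        Node("worker-1", 4000, 3500, 8192, 1024),
        Node("worker-2", 4000, 1000, 8192, 4096),
        Node("worker-3", 2000, 0, 2048, 0),
    ]
    print(schedule(500, 512, cluster))   # worker-3: most room left afterwards
    print(schedule(3000, 512, cluster))  # worker-2: only node with 3000m CPU free
    print(schedule(5000, 512, cluster))  # None: no node is big enough
```

Note that the sketch, like the real scheduler, reasons about what pods have *requested*, not what they are currently using.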

The worker nodes, on the other hand, run the kubelet, a Kubernetes agent that connects each worker node to the rest of the cluster: it watches the API server for work assigned to its node, carries it out, and reports back to the master node. It also monitors the node's pods and reports to the control plane if one is not working properly. The worker nodes also host Pods, the smallest deployable unit in Kubernetes. A Pod is a wrapper around one or more containers; most commonly it holds a single container with the application code. The kube-proxy on each worker node is a network proxy that allows network sessions from inside or outside the cluster to reach these Pods. The virtual network, shown with black arrows in Figure 1, lets the master and worker nodes communicate with each other.
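Besides reporting problems, the kubelet restarts failed containers itself, according to the pod's restart policy. The Kubernetes documentation describes the wait between restarts as an exponential back-off (10s, 20s, 40s, …) capped at five minutes, which is reset once a container has run for 10 minutes without problems; this delay is what lies behind the familiar `CrashLoopBackOff` status. The sketch below models only that timing rule; the function and constant names are hypothetical, and the real kubelet handles more cases.

```python
BASE_DELAY_S = 10      # first delay in the documented 10s, 20s, 40s, ... sequence
MAX_DELAY_S = 300      # documented cap: five minutes
RESET_AFTER_S = 600    # a clean 10-minute run resets the back-off


def restart_delay(failed_restarts: int) -> int:
    """Seconds to wait before the next restart, given how many restarts already failed."""
    if failed_restarts < 0:
        raise ValueError("failed_restarts must be non-negative")
    return min(BASE_DELAY_S * 2 ** failed_restarts, MAX_DELAY_S)


def next_failed_restarts(failed_restarts: int, ran_for_s: float) -> int:
    """Update the counter after a container exits: a long clean run starts the back-off over."""
    return 0 if ran_for_s >= RESET_AFTER_S else failed_restarts + 1


if __name__ == "__main__":
    print([restart_delay(n) for n in range(7)])  # [10, 20, 40, 80, 160, 300, 300]
```

Doubling the delay protects the node from a container that crashes instantly, while the cap keeps a recovered dependency from leaving the application down for long.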

Environments

Kubernetes can run in a variety of cluster environments, such as production, learning, development, or test. Each requires a different level of planning and preparation and has its own requirements. A production environment may need secure access, constant availability, and the capacity to react to changing needs, whereas in a development environment the priority may be on development modes such as pure offline, proxied, live, or purely online. When a company shifts to Kubernetes, it usually starts in a development or test environment, where deploying a container is relatively ‘easy’ and the requirements are different; this can lead the company to overestimate how well it can handle the operational needs of production. In a development or test environment, an engineer does not have to worry about open network connections or a cluster going down, but in production the business risks are significantly higher because of potential downtime and a bigger attack surface. Similarly, once a business moves to production, the volume of data produced grows so much that monitoring has to scale along with it.

How Kubernetes Mini Data Centers Are Changing The Traditional Approach To Cloud Computing

Microservices architecture has been recognized as a driver of more efficient use of processing power in data centers, and Kubernetes is one of the leading tools for data center consolidation. It helps set up, deploy, and manage containerized applications across clusters of hosts, automating much of the work that would otherwise require manual operations. The goal of data center consolidation is to make IT more efficient: data centers are used for their primary purpose (processing and storage) rather than for other things like server rooms, conference rooms, or private offices. Data center consolidation is inevitable because of the emergence of Infrastructure as a Service (IaaS) providers like AWS, Azure, and Google Cloud Platform, which compete with traditional on-premises solutions by offering lower costs and greater scalability.

The containerization revolution has also contributed to more energy-efficient data centers. Just as we can get more out of a single server chip than before, we can get more out of the data centers that house those servers by shrinking their footprint. There are many reasons to consider adding a Kubernetes Mini Data Center to your data center; the most compelling are the security, scalability, and flexibility advantages it provides. A Kubernetes Mini Data Center offers a more secure, scalable, and flexible way of running your workloads, including databases, which means it can suit a business of any size and makes it easier to add resources as your needs grow. Kubernetes has become the de facto standard for container orchestration among developers, and its strength extends beyond individual application workloads: it can act as a common operating layer that lets workloads run across multiple locations.

The Future of Cloud Computing Is Here & It’s Converging with Containers & Orchestration

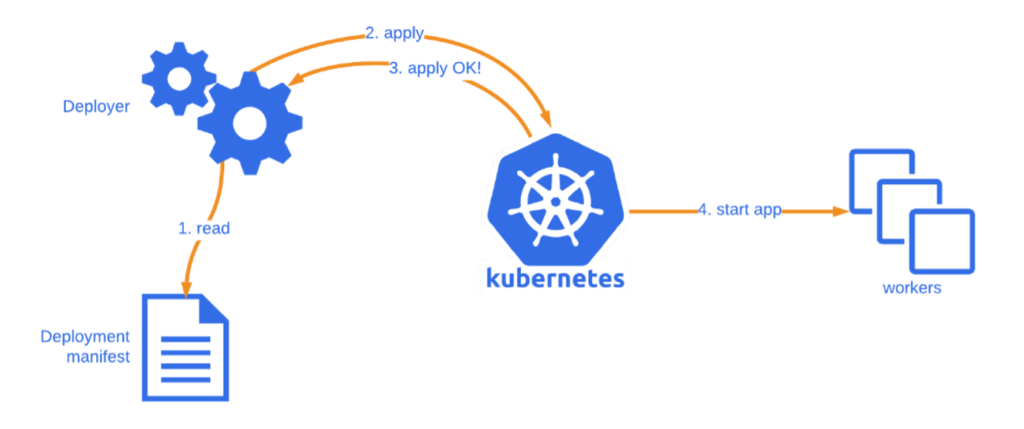

Deploying a basic Kubernetes cluster is not a very difficult task: the required knowledge is fairly easy to acquire, and the deployment process itself is not complicated. Kubernetes has many use cases and is becoming more and more popular among developers. It is highly scalable and can be used in different environments, such as public clouds, private data centers, and hybrid environments. Kubernetes also has a strong community that continuously improves the project by adding features. It also solves problems that traditional systems struggle with, such as scaling, updating applications without service interruption, and handling failures.
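One way Kubernetes updates applications without service interruption is a Deployment's rolling update strategy, governed by the `maxSurge` and `maxUnavailable` settings (both 25% by default, with percentages rounded up for `maxSurge` and down for `maxUnavailable`). The Python sketch below simulates the resulting sequence of old and new pod counts. It is a simplified model that assumes new pods become ready instantly and that the rollout proceeds in discrete steps; the real Deployment controller works through ReplicaSets and waits for pods to become ready. The function names are hypothetical.

```python
import math


def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string like "25%" into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rolling_update(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return (old_pods, new_pods) after each step of a simulated rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)                 # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)    # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        raise ValueError("this sketch cannot make progress with no surge and no unavailability")
    old, new, steps = replicas, 0, []
    while old > 0 or new < replicas:
        # Scale up: never run more than replicas + surge pods in total.
        new += max(0, min(replicas + surge - (old + new), replicas - new))
        # Scale down: keep at least replicas - unavailable pods serving traffic.
        old -= max(0, min(old, old + new - (replicas - unavailable)))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    print(rolling_update(4))  # [(2, 1), (0, 3), (0, 4)]
```

With four replicas and the defaults, at most five pods ever exist and at least three are always serving, which is how the rollout avoids an outage.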

Technology is changing at a rapid pace, and the resulting landscape can be difficult to navigate. Kubernetes, microservices, and DevOps offer a variety of benefits for any organization looking to modernize its IT infrastructure.

To conclude: Kubernetes is worth the investment!

Contact us if you need help or guidance implementing microservices and Kubernetes in your organization.

Find out more: info@planetofit.ca

By: Bakhtawar Shafqat